SafePub: A Truthful Data Anonymization Algorithm With Strong Privacy Guarantees

Authors: Raffael Bild (Technical University of Munich, Germany), Klaus A. Kuhn (Technical University of Munich, Germany), Fabian Prasser (Technical University of Munich, Germany)

Volume: 2018

Issue: 1

Pages: 67–87

DOI: https://doi.org/10.1515/popets-2018-0004

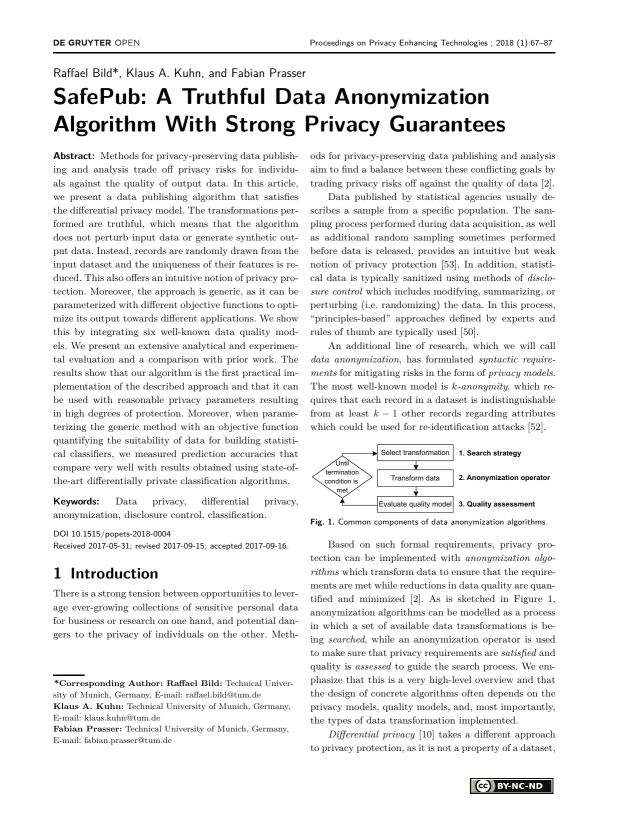

Abstract: Methods for privacy-preserving data publishing and analysis trade off privacy risks for individuals against the quality of output data. In this article, we present a data publishing algorithm that satisfies the differential privacy model. The transformations performed are truthful, which means that the algorithm does not perturb input data or generate synthetic output data. Instead, records are randomly drawn from the input dataset and the uniqueness of their features is reduced. This also offers an intuitive notion of privacy protection. Moreover, the approach is generic, as it can be parameterized with different objective functions to optimize its output towards different applications. We show this by integrating six well-known data quality models. We present an extensive analytical and experimental evaluation and a comparison with prior work. The results show that our algorithm is the first practical implementation of the described approach and that it can be used with reasonable privacy parameters resulting in high degrees of protection. Moreover, when parameterizing the generic method with an objective function quantifying the suitability of data for building statistical classifiers, we measured prediction accuracies that compare very well with results obtained using state-of-the-art differentially private classification algorithms.
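The two truthful steps named in the abstract (drawing records at random, then reducing the uniqueness of their features) can be illustrated with a minimal Python sketch. It assumes a fixed, hand-written generalization (ages coarsened to decades, ZIP codes truncated to three digits), a sampling probability `beta`, and a frequency threshold `k`; the names `sample_and_suppress` and `generalize_example` and all parameter values are hypothetical. This is not SafePub itself: it omits how the transformation and the parameters are selected and makes no claim about which settings yield differential privacy.

```python
import random
from collections import Counter
from typing import Callable, List, Sequence, Tuple

Record = Tuple[str, ...]


def generalize_example(record: Record) -> Record:
    # Hypothetical generalization: age -> decade range, ZIP -> 3-digit prefix.
    age, zip_code = record
    low = int(age) // 10 * 10
    return (f"{low}-{low + 9}", zip_code[:3] + "**")


def sample_and_suppress(
    records: Sequence[Record],
    generalize: Callable[[Record], Record],
    beta: float,
    k: int,
    rng: random.Random,
) -> List[Record]:
    # Step 1: keep each input record independently with probability beta.
    sampled = [r for r in records if rng.random() < beta]
    # Step 2: coarsen attribute values so that records become less unique.
    generalized = [generalize(r) for r in sampled]
    # Step 3: suppress records whose generalized values occur fewer than k times.
    counts = Counter(generalized)
    # Output contains only coarsened input values: nothing perturbed or synthesized.
    return [g for g in generalized if counts[g] >= k]


if __name__ == "__main__":
    rows = [("34", "81675"), ("37", "81677"), ("52", "80331"), ("31", "81679")]
    print(sample_and_suppress(rows, generalize_example, beta=1.0, k=2, rng=random.Random(42)))
    # → [('30-39', '816**'), ('30-39', '816**'), ('30-39', '816**')]
```

With `beta=1.0` every record is kept, so the output is deterministic: the one record that is alone in its generalized group is suppressed, and the remaining three share a single coarsened value.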

Keywords: Data privacy, differential privacy, anonymization, disclosure control, classification.

Copyright in PoPETs articles is held by their authors. This article is published under a Creative Commons Attribution-NonCommercial-NoDerivs 3.0 license.