Individualized PATE: Differentially Private Machine Learning with Individual Privacy Guarantees

Authors: Franziska Boenisch (Vector Institute), Christopher Mühl (Free University Berlin), Roy Rinberg (Columbia University), Jannis Ihrig (Free University Berlin), Adam Dziedzic (University of Toronto, Vector Institute)

Volume: 2023

Issue: 1

Pages: 158–176

DOI: https://doi.org/10.56553/popets-2023-0010

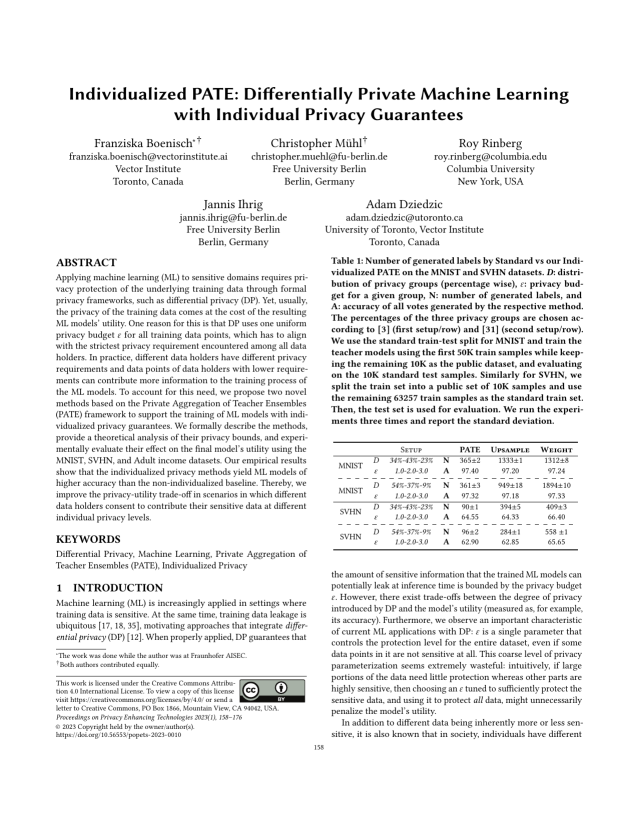

Abstract: Applying machine learning (ML) to sensitive domains requires protecting the underlying training data through formal privacy frameworks, such as differential privacy (DP). Yet the privacy of the training data usually comes at the cost of the resulting ML models' utility. One reason for this is that DP uses one uniform privacy budget ε for all training data points, which has to align with the strictest privacy requirement encountered among all data holders. In practice, different data holders have different privacy requirements, and data points from holders with lower requirements can contribute more information to the training process of the ML models. To account for this need, we propose two novel methods based on the Private Aggregation of Teacher Ensembles (PATE) framework to support the training of ML models with individualized privacy guarantees. We formally describe the methods, provide a theoretical analysis of their privacy bounds, and experimentally evaluate their effect on the final model's utility using the MNIST, SVHN, and Adult income datasets. Our empirical results show that the individualized privacy methods yield ML models of higher accuracy than the non-individualized baseline. Thereby, we improve the privacy-utility trade-off in scenarios in which different data holders consent to contribute their sensitive data at different individual privacy levels.
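The abstract's setting can be made more concrete with a minimal sketch of two ideas. The first is the uniform-budget constraint: with one ε for everyone, the budget must equal the strictest holder's requirement. The second is PATE-style noisy-argmax aggregation of teacher votes. The sketch assumes Gaussian noise, as in the GNMax variant of PATE, and uses only the Python standard library. The names `noisy_argmax`, `sigma`, `weights`, and `requirements` are hypothetical and chosen for illustration. The optional `weights` argument only shows how some teachers' votes could count more. It is not presented as either of the paper's two methods, and no privacy accounting is performed.

```python
import random


def noisy_argmax(votes, num_classes, sigma, weights=None, rng=None):
    """Aggregate teacher votes PATE-style: add Gaussian noise to per-class
    vote counts and return the label with the highest noisy count.

    votes:   list of predicted labels, one per teacher
    sigma:   standard deviation of the Gaussian noise (hypothetical setting)
    weights: optional per-teacher vote weights (illustrative assumption only)
    """
    rng = rng or random.Random()
    if weights is None:
        weights = [1.0] * len(votes)  # standard PATE: every teacher counts once
    counts = [0.0] * num_classes
    for label, w in zip(votes, weights):
        counts[label] += w
    # Perturb each class count independently before taking the argmax.
    noisy = [c + rng.gauss(0.0, sigma) for c in counts]
    return max(range(num_classes), key=lambda k: noisy[k])


# Hypothetical per-holder privacy requirements (epsilon values).
requirements = [1.0, 3.0, 8.0]
# With a single uniform budget, DP must honor the strictest holder.
uniform_eps = min(requirements)
```

For example, `noisy_argmax([2, 2, 2, 0], num_classes=3, sigma=0.0)` returns the plurality label `2`. A larger `sigma` makes the released label noisier and, in PATE's analysis, more private.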

Keywords: Differential Privacy, Machine Learning, Private Aggregation of Teacher Ensembles (PATE), Individualized Privacy

Copyright in PoPETs articles is held by their authors. This article is published under a Creative Commons Attribution 4.0 license.