Leaky Diffusion: Attribute Leakage in Text-Guided Image Generation

Authors: Anastasios Lepipas (Imperial College London), Marios Charalambides (Imperial College London), Jiani Liu (Imperial College London), Yiying Guan (Imperial College London), Dominika C Woszczyk (Imperial College London), Mansi (Imperial College London), Thanh Hai Le (Imperial College London), Soteris Demetriou (Imperial College London)

Volume: 2025

Issue: 4

Pages: 275–292

DOI: https://doi.org/10.56553/popets-2025-0130

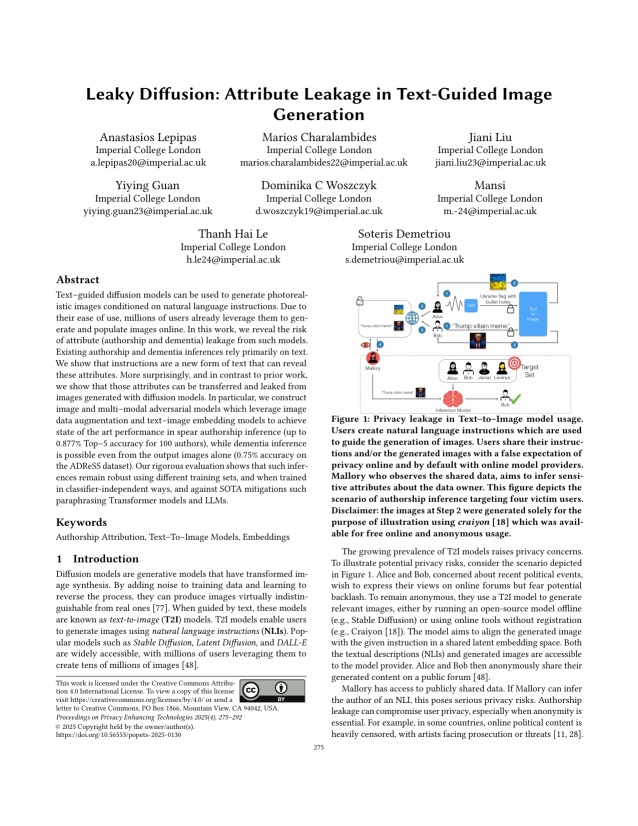

Abstract: Text-guided diffusion models can generate photorealistic images conditioned on natural-language instructions. Because they are easy to use, millions of users already rely on them to generate images and post them online. In this work, we reveal the risk of attribute leakage (specifically authorship and dementia) from such models. Existing authorship and dementia inference techniques rely primarily on text. We show that instructions are a new form of text that can reveal these attributes. More surprisingly, and in contrast to prior work, we show that these attributes can be transferred to, and leaked from, images generated with diffusion models. In particular, we construct image-based and multi-modal adversarial models that leverage image data augmentation and text-image embedding models to achieve state-of-the-art performance in spear authorship inference (up to 0.877 Top-5 accuracy for 100 authors), while dementia inference is possible even from the output images alone (0.75 accuracy on the ADReSS dataset). Our rigorous evaluation shows that these inferences remain robust across different training sets, when trained in classifier-independent ways, and against SOTA mitigations such as paraphrasing Transformer models and LLMs.
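Top-5 accuracy, the metric used above for spear authorship inference, counts a prediction as correct when the true author is among the classifier's five highest-scoring candidates. With 100 candidate authors, random guessing therefore achieves 5/100 = 0.05. The sketch below shows how this metric is conventionally computed. It is a minimal illustration under stated assumptions, not the authors' evaluation code: the function name `top_k_accuracy`, the score layout (one row of per-author scores per sample), and the toy data are all hypothetical.

```python
import heapq
import random
from typing import Sequence


def top_k_accuracy(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int = 5) -> float:
    """Fraction of samples whose true label is among the k highest-scoring classes.

    Hypothetical helper: each row of `scores` holds one score per candidate author,
    and `labels` holds the index of the true author for that row.
    """
    hits = 0
    for row, label in zip(scores, labels):
        # Indices of the k largest scores in this row (order within the top k is irrelevant).
        top_k = heapq.nlargest(k, range(len(row)), key=row.__getitem__)
        hits += label in top_k
    return hits / len(labels)


# Toy data (hypothetical): 3 samples scored against 100 candidate authors.
random.seed(0)
n_authors = 100
scores = [[random.random() for _ in range(n_authors)] for _ in range(3)]
labels = [0, 1, 2]
scores[0][0] = 2.0   # true author ranked first -> hit
scores[1][1] = 2.0   # true author ranked first -> hit
scores[2][2] = -1.0  # true author ranked last -> miss

acc = top_k_accuracy(scores, labels, k=5)
chance = 5 / n_authors  # expected Top-5 accuracy of random guessing
print(acc, chance)
```

Because only membership in the top k matters, `heapq.nlargest` avoids fully sorting each row of scores, which keeps the computation cheap when the number of candidate authors grows.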

Keywords: Authorship Attribution, Text-To-Image Models, Embeddings

Copyright in PoPETs articles is held by their authors. This article is published under a Creative Commons Attribution 4.0 license.